In 2025, AI safety made meaningful technical progress, but advances in model capabilities continued to outpace our ability to fully understand and control them. Breakthroughs in interpretability and monitoring revealed both new opportunities and serious vulnerabilities, while real-world evidence of misalignment pushed the field toward AI control and responsible scaling.

Read post

Together, Part 1 and Part 2 of The Year in AI — Best of 2025 show how AI crossed a new threshold in both reasoning and vision. From reasoning LLMs and agentic systems to flow-matching diffusion, Gaussian splatting, and generative video, 2025 marked the shift from experimental models to scalable, real-world AI. Read Part 1 and Part 2 for the full scope of these breakthroughs.

Read post

This post explores a surprising discovery in modern reasoning models: seemingly meaningless words like “Hmm,” “Wait,” or “I apologize” often act as control signals that shape how a model thinks, backtracks, or refuses. Drawing on recent research, it shows how these ordinary-looking tokens can function as mode switches: structurally load-bearing elements that influence reasoning quality, test-time compute, and even safety behavior.

Read post

Language models are starting to break free from the limits of word-by-word prediction, stepping into continuous latent spaces where they can plan, reason, and represent meaning more like humans do. This article dives into the breakthrough approaches enabling this shift—from concept-level modeling to latent chain-of-thought. The result is a glimpse at a new generation of AI that thinks before it speaks.

Read post

In just three years, AI has gone from fumbling over basic math to proving new theorems. This post explores how GPT-5 and systems like DeepMind’s AlphaEvolve are transforming mathematical discovery, from extending probability theory to contributing key insights in quantum complexity, marking the dawn of AI as a true research collaborator.

Read post

DeepMind has built an AI “co-scientist” that automates parts of the research process by generating, testing, and refining code like a tireless grad student. It’s already outperforming human baselines in multiple scientific domains, offering a glimpse into how AI could accelerate discovery.

Read post

Meta FAIR’s new Vision Language World Models (VLWM) take a fresh approach to AI planning: instead of predicting pixels or manipulating abstract vectors, they describe future states and actions in plain English. This makes plans interpretable, editable, and easier to trust, bridging the gap between perception and action. VLWM uses a pipeline of video-to-text compression (Tree of Captions), iterative refinement, and a critic model to evaluate alternative futures, achieving state-of-the-art results in visual planning benchmarks. While challenges remain—like text fidelity, dataset quality, and limits on fine-grained control—VLWM marks a major step toward human-AI collaborative planning.

Read post

The Hierarchical Reasoning Model (HRM) is a brain-inspired architecture that overcomes the depth limitations of current LLMs by reasoning in layers. Using two nested recurrent modules—fast, low-level processing and slower, high-level guidance—it achieves state-of-the-art results on complex reasoning benchmarks with only 27M parameters. HRM’s design enables adaptive computation, interpretable problem-solving steps, and emergent dimensional hierarchies similar to those seen in the brain. While tested mainly on structured puzzles, its efficiency and architectural innovation hint at a promising alternative to brute-force scaling.

Read post

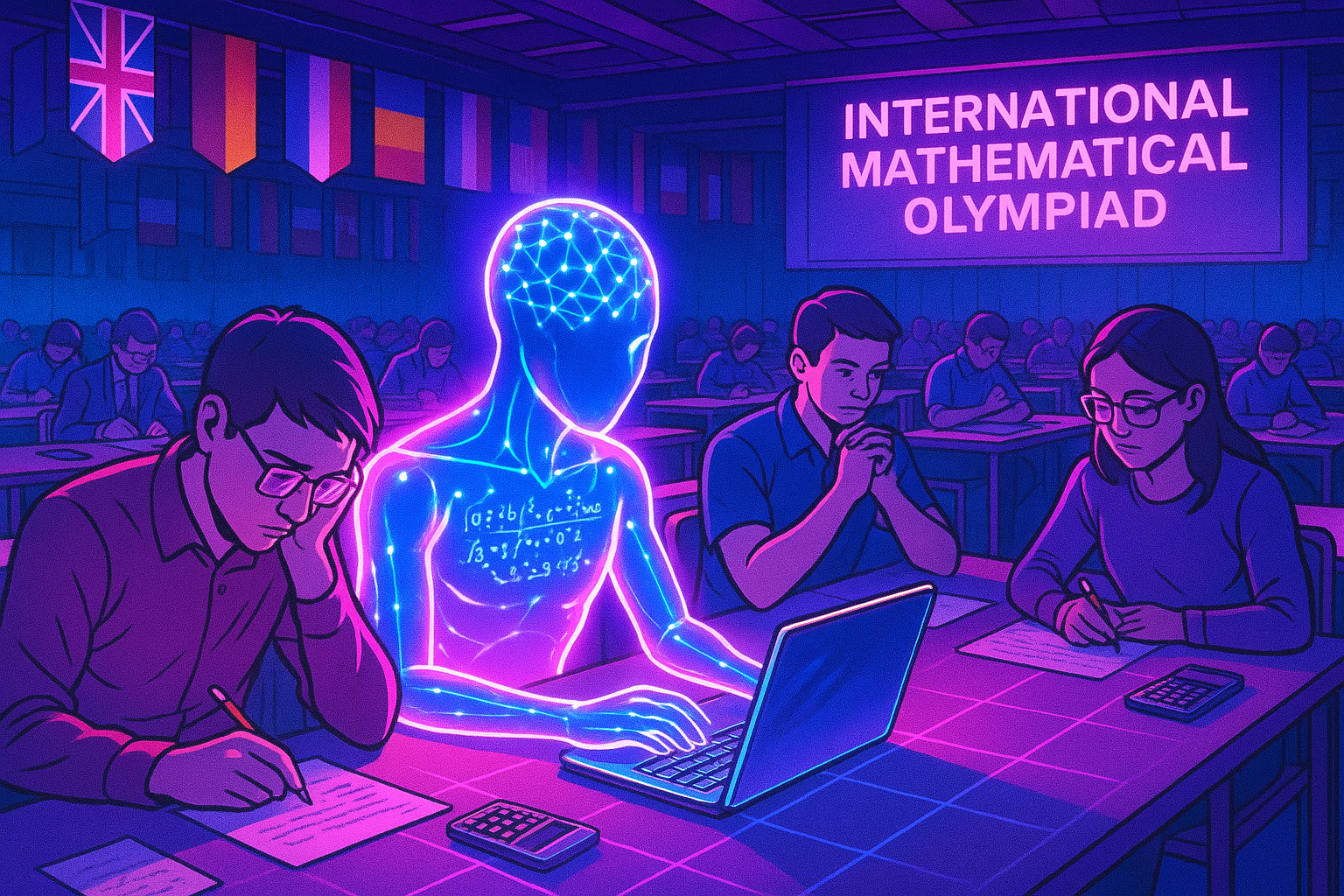

At the 2025 International Mathematical Olympiad, AI models from OpenAI and DeepMind achieved gold-medal level performance, solving 5 out of 6 challenging math problems. This historic milestone marks a turning point in AI’s ability to reason creatively, not just compute. The article traces the evolution from formal theorem provers to language-based models, exploring benchmark controversies and how extended deliberation unlocked new capabilities. It also examines the broader implications for math education, research, and the future of machine reasoning.

Read post

AI in 2025 is quietly evolving, not through flashy new models, but via deep integration and engineering advances. This article explores how recursive self-improvement, reasoning models, and infrastructure breakthroughs may be laying the groundwork for artificial general intelligence (AGI). We may be entering the "plumbing phase" of AGI: less hype, more substance.

Read post

Modern LLMs can ace Olympiad math yet stumble over toddler-level riddles, creating a “jagged frontier” where brilliance and blunders sit side by side. This article dissects three case studies—Salesforce’s SIMPLE benchmark, IBM-led Enterprise Bench, and Apple’s hotly debated “Illusion of Thinking” paper—to show why today’s AI is both a breakthrough and a liability in waiting.

Read post

AI systems are no longer just assisting with research—they’re conducting it. From drug discovery to publishing peer-reviewed papers, tools like Robin and Zochi are reshaping the scientific method itself.

Read post

Hallucinations in LLMs aren’t just random mistakes—they often stem from identifiable internal patterns. This article explains how new interpretability tools are helping researchers trace and potentially control these behaviors.

Read post

Reward models are the backbone of modern LLM fine-tuning, guiding models toward helpful, honest, and safe behavior. But aligning AI with human values is harder than it looks—and new research is pushing reward modeling into uncharted territory.

Read post


Transformers have powered the rise of large language models—but their limitations are becoming more apparent. New architectures like diffusion models, Mamba, and Titans point the way to faster, smarter, and more scalable AI systems.

Read post

As AI systems move beyond language into reasoning, infrastructure demands are skyrocketing. Apolo offers a secure, scalable, on-prem solution to help enterprises and data centers stay ahead in the age of near-AGI.

Read post

Custom GPT models enhance communication, strengthen data security, and streamline operations while addressing industry-specific challenges. They unlock insights, ensure compliance, and improve efficiency, helping businesses cut costs and stay competitive in an AI-driven world.

Read post

LLMs are powerful but struggle with context retention, reasoning, input sensitivity, and bias, sometimes producing incoherent or incorrect responses. Human oversight is crucial for accuracy and responsible AI deployment.

Read post

The experiment evaluated GPT-4’s performance on CPUs vs. GPUs, finding comparable accuracy with a manageable increase in training time and inference latency, making CPUs a viable alternative.

Read post

Andrej Karpathy’s talk on GPT-4 covered prompt engineering, model augmentation, and finetuning while stressing bias risks, human oversight, and its versatility.

Read post

Meta’s I-JEPA, introduced by Yann LeCun, learns internal world representations instead of pixels. It excels in low-shot classification, self-supervised learning, and image representation, surpassing generative models in accuracy and efficiency.

Read post

Meta’s Llama 2, an open-source LLM, supports research and commercial use with 7B to 70B parameters. Developed with Microsoft, it’s optimized for dialogue and integrates with Azure AI, AWS, and Hugging Face.

Read post

Despite GPT-4’s 128k token window, performance drops after 25k tokens, increasing costs. RAG strategies improve efficiency and manageability.

Read post

RAG systems struggle with accuracy, retrieval, and security. Optimizing data, refining prompts, and adding safeguards improve performance and reliability.

Read post

Aaren merges Transformers' efficiency with RNNs' adaptability, optimizing memory and computation while matching Transformer performance.

Read post

AI is revolutionizing data centers, driving real revenue through faster, smarter infrastructure. theMind’s multi-tenant MLOps platform helps businesses maximize AI’s potential for tangible outcomes.

Read post

AI and ML drive automated testing, anomaly detection, and predictive maintenance, while digital twins enhance network efficiency and scalability for 5G and cloud.

Read post

As data center energy demands rise, nuclear power provides a sustainable, reliable solution. Unlike renewables, it ensures consistent power, and SMR advancements enhance safety for a greener future.

Read post

As data centers demand more energy, sustainability is key. This blog explores cooling, renewables, and AI optimization for efficiency. Learn how theMind supports greener data centers.

Read post

Data centers face rising rack density from AI and HPC demands, outpacing traditional cooling and power systems. Discover how liquid cooling is shaping future efficiency.

Read post